Introduction

Chatbots have transformed the way we interact with technology, enabling automated, intelligent conversations across many domains. Building these chat systems can be challenging, especially when aiming for flexibility and scalability. AutoGen simplifies this process by leveraging AI agents, which handle complex dialogues and tasks autonomously. In this article, we’ll explore how to build agentic chatbots using AutoGen and its agent-based framework, which makes creating adaptive, intelligent conversational bots easier.

Overview

- Learn what the AutoGen framework is and what it can do.

- See how to create chatbots that can hold discussions with each other, respond to human queries, search the web, and more.

- Know the setup requirements and prerequisites for building agentic chatbots using AutoGen.

- Learn how to enhance chatbots by integrating tools like Tavily for web searches.

What’s AutoGen?

In AutoGen, all interactions are modelled as conversations between agents. This agent-to-agent, chat-based communication streamlines the workflow, making it intuitive to start building chatbots. The framework also offers flexibility by supporting various conversation patterns such as sequential chats, group chats, and more.

Let’s explore AutoGen’s chatbot capabilities as we build different types of chatbots:

- Dialectic between agents: Two experts in a field discuss a topic and try to resolve their contradictions.

- Interview preparation chatbot: We will use an agent to prepare for an interview; it asks questions and evaluates the answers.

- Chat with a web search tool: We can chat with a search tool to get information from the web.

Learn More: Autogen: Exploring the Fundamentals of a Multi-Agent Framework

Prerequisites

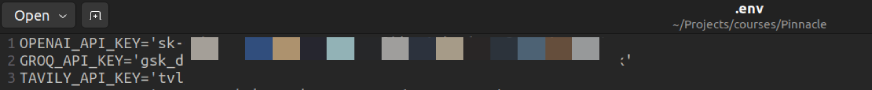

Before building AutoGen agents, ensure you have the required API keys for the LLMs. We will also use Tavily to search the web.

Accessing via API

In this article, we use OpenAI and Groq API keys. Groq offers free access to many open-source LLMs, up to certain rate limits.

We can use any LLM we prefer. Start by generating an API key for the LLM and for the Tavily search tool.

Create a .env file to store these keys securely, keeping them private while making them easily accessible within your project.
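
As a rough illustration of the .env step, the sketch below reads KEY=VALUE lines into the process environment using only the standard library. The helper name load_env_file is hypothetical, and the variable names OPENAI_API_KEY, GROQ_API_KEY, and TAVILY_API_KEY are assumed to be the ones the respective client libraries look for; a package such as python-dotenv is a common alternative to writing this by hand.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Read KEY=VALUE lines from a .env file into os.environ.

    Variables that are already set in the environment are left untouched.
    Returns only the variables that were newly set.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip("'\"")
        if key and key not in os.environ:
            os.environ[key] = value
            loaded[key] = value
    return loaded


if __name__ == "__main__":
    load_env_file()
    # Assumed variable names; report which ones are available.
    for name in ("OPENAI_API_KEY", "GROQ_API_KEY", "TAVILY_API_KEY"):
        print(name, "set" if os.environ.get(name) else "missing")
```

Keeping the .env file out of version control (for example through .gitignore) is what keeps the keys private.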

Libraries Required

autogen-agentchat – 0.2.36

tavily-python – 0.5.0

groq – 0.7.0

openai – 1.46.0

Dialectic Between Agents

Dialectic is a method of argumentation or reasoning that seeks to explore and resolve contradictions or opposing viewpoints. We let two LLMs participate in a dialectic using AutoGen agents.

Let’s create our first agent:

from autogen import ConversableAgent

agent_1 = ConversableAgent(
    name="expert_1",
    system_message="""You are participating in a dialectic about concerns of Generative AI with another expert.
    Make your points on the thesis concisely.""",
    llm_config={"config_list": [{"model": "gpt-4o-mini", "temperature": 0.5}]},
    code_execution_config=False,
    human_input_mode="NEVER",
)

Code Explanation

- ConversableAgent: This is the base class for building customizable agents that can talk and interact with other agents, people, and tools to solve tasks.

- system_message: This parameter defines the agent’s role and purpose in the conversation. Here, agent_1 is instructed to engage in a dialectic about generative AI, making concise points on the thesis.

- llm_config: This configuration specifies the language model to use, here "gpt-4o-mini". Additional parameters such as temperature=0.5 control the creativity and variability of the model’s responses.

- code_execution_config=False: This disables code execution for the agent.

- human_input_mode="NEVER": This ensures the agent never asks for human input and operates fully autonomously.
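
To make the shape of llm_config concrete, here is a minimal sketch that builds the same dictionary from an environment variable. The helper make_llm_config is hypothetical and not part of AutoGen; it assumes the key is stored under a variable such as OPENAI_API_KEY and passes it through the "api_key" entry of the config list.

```python
import os


def make_llm_config(model, api_key_env, api_type=None, temperature=0.5):
    """Return a dict shaped like the llm_config passed to ConversableAgent."""
    entry = {"model": model, "temperature": temperature}
    if api_type is not None:
        # Only needed for providers other than the default one.
        entry["api_type"] = api_type
    api_key = os.environ.get(api_key_env)
    if api_key:
        # Leave the key out entirely when the variable is not set.
        entry["api_key"] = api_key
    return {"config_list": [entry]}


openai_config = make_llm_config("gpt-4o-mini", "OPENAI_API_KEY", temperature=0.5)
print(openai_config["config_list"][0]["model"])
```

Building the dictionary in one place makes it easier to swap models or temperatures without editing every agent definition.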

Now the second agent:

agent_2 = ConversableAgent(
    "expert_2",
    system_message="""You are participating in a dialectic about concerns of Generative AI with another expert. Make your points on the anti-thesis concisely.""",
    llm_config={"config_list": [{"api_type": "groq", "model": "llama-3.1-70b-versatile", "temperature": 0.3}]},
    code_execution_config=False,
    human_input_mode="NEVER",
)

Here, we use the Llama 3.1 model from Groq. To learn how to configure different LLMs, refer to the AutoGen documentation.

Let us initiate the chat:

result = agent_1.initiate_chat(
    agent_2,
    message="""The nature of data collection for training AI models poses inherent privacy risks""",
    max_turns=3,
    silent=False,
    summary_method="reflection_with_llm",
)

Code Explanation

In this code, agent_1 initiates a conversation with agent_2 using the provided message.

- max_turns=3: This limits the conversation to three exchanges between the agents, after which it ends automatically.

- silent=False: This displays the conversation in real time.

- summary_method="reflection_with_llm": This uses an LLM to summarize the entire dialogue after the conversation concludes, providing a reflective summary of the interaction.
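
To see how max_turns relates to the number of messages exchanged, the following plain-Python model may help. It is not AutoGen’s implementation; it simply assumes that one turn consists of one message from the initiating agent followed by one reply from the other agent. The function simulate_chat and the reply lambdas are hypothetical stand-ins for the LLM-backed agents.

```python
def simulate_chat(initiator_reply, recipient_reply, message, max_turns):
    """Alternate two reply functions and record every message sent."""
    history = []
    outgoing = message
    for turn in range(max_turns):
        if turn > 0:
            # After the first turn, the initiator responds to the last reply.
            outgoing = initiator_reply(history)
        history.append({"name": "expert_1", "content": outgoing})
        history.append({"name": "expert_2", "content": recipient_reply(history)})
    return history


history = simulate_chat(
    initiator_reply=lambda h: f"thesis point {len(h) // 2 + 1}",
    recipient_reply=lambda h: f"antithesis point {(len(h) + 1) // 2}",
    message="opening statement",
    max_turns=3,
)
print(len(history))  # 6: three messages from each side
```

Under this assumption, three turns produce six messages, three from each agent.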

You can go through the entire dialectic using the chat_history attribute of the result.

Here’s the result:

len(result.chat_history)
# >>> 6
# each agent contributes 3 messages.

# we can also check the cost incurred
print(result.cost)

# get the chat history
print(result.chat_history)

# finally, the summary of the chat
print(result.summary['content'])

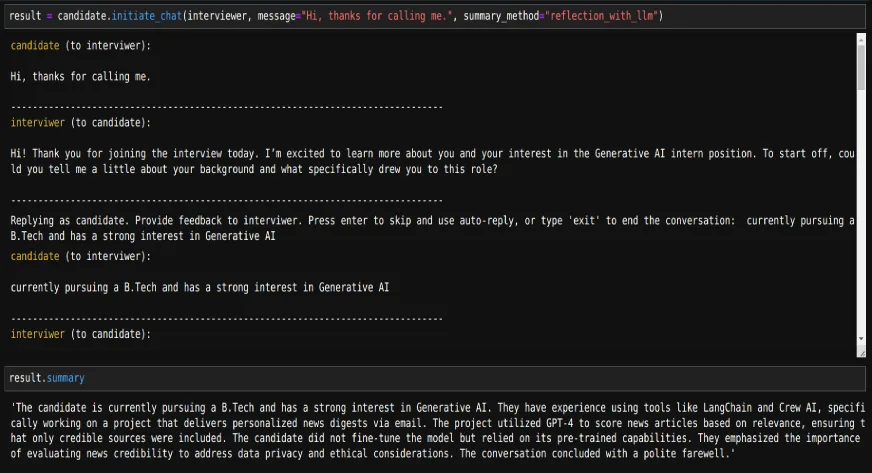
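
Printing the raw chat history can be hard to read. The sketch below formats it as one line per message, assuming each entry is a dictionary with a "content" key and a "name" or "role" key. The helper format_transcript and the sample data are hypothetical; in practice the list would come from result.chat_history.

```python
def format_transcript(chat_history, width=80):
    """Turn a list of message dicts into one readable line per message."""
    lines = []
    for index, message in enumerate(chat_history, start=1):
        speaker = message.get("name") or message.get("role") or "unknown"
        content = (message.get("content") or "").strip().replace("\n", " ")
        if len(content) > width:
            # Truncate long messages so each one fits on a single line.
            content = content[: width - 3] + "..."
        lines.append(f"{index}. {speaker}: {content}")
    return "\n".join(lines)


# Hypothetical sample data standing in for result.chat_history.
sample_history = [
    {"role": "assistant", "name": "expert_1", "content": "Data collection carries privacy risks."},
    {"role": "user", "name": "expert_2", "content": "Regulation and anonymization reduce those risks."},
]
print(format_transcript(sample_history))
```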

Interview Preparation Chatbot

In addition to making two agents chat with each other, we can also chat with an AI agent ourselves. Let’s try this by building an agent that can be used for interview preparation.

interviewer = ConversableAgent(
    "interviewer",
    system_message="""You are interviewing to select for the Generative AI intern position.
    Ask suitable questions and evaluate the candidate.""",
    llm_config={"config_list": [{"api_type": "groq", "model": "llama-3.1-70b-versatile", "temperature": 0.0}]},
    code_execution_config=False,
    human_input_mode="NEVER",
    # max_consecutive_auto_reply=2,
    is_termination_msg=lambda msg: "goodbye" in msg["content"].lower()
)

Code Explanation

Use the system_message to define the role of the agent.

To terminate the conversation, we can use either of the two parameters below:

- max_consecutive_auto_reply: This parameter limits the number of consecutive automatic replies an agent can send. Once the agent reaches this limit, the conversation ends automatically, preventing it from continuing indefinitely.

- is_termination_msg: This parameter takes a function that checks whether a message contains a specific pre-defined keyword. When the keyword is detected, the conversation is terminated automatically.
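
The lambda used for is_termination_msg above assumes every message has text content. A slightly more defensive variant is sketched below, assuming messages are dictionaries whose "content" value may be missing or None. The factory make_termination_check is a hypothetical helper, not an AutoGen function.

```python
def make_termination_check(keyword):
    """Build a predicate that can be passed as is_termination_msg."""
    needle = keyword.lower()

    def is_termination_msg(msg):
        content = msg.get("content")
        # Some messages carry no text content, so guard against None.
        if not isinstance(content, str):
            return False
        return needle in content.lower()

    return is_termination_msg


ends_on_goodbye = make_termination_check("goodbye")
print(ends_on_goodbye({"content": "Thanks for your time. Goodbye!"}))  # True
print(ends_on_goodbye({"content": None}))  # False
```

The returned function can be supplied wherever the lambda is used, for example is_termination_msg=make_termination_check("goodbye").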

candidate = ConversableAgent(
    "candidate",
    system_message="""You are attending an interview for the Generative AI intern position.
    Answer the questions accordingly""",
    llm_config=False,
    code_execution_config=False,
    human_input_mode="ALWAYS",
)

Since the user is going to provide the answers, we use human_input_mode="ALWAYS" and llm_config=False.

Now, we can start the mock interview:

result = candidate.initiate_chat(interviewer, message="Hello, thanks for calling me.", summary_method="reflection_with_llm")

# we can get the summary of the conversation too
print(result.summary)

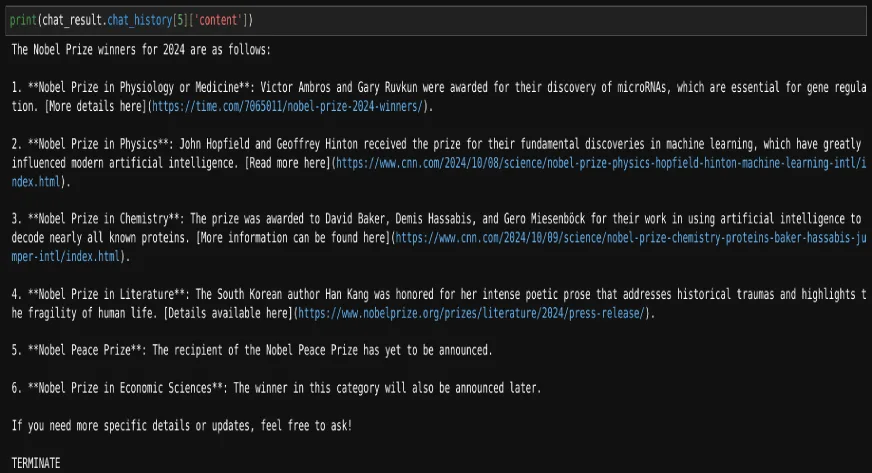

Chat with Web Search

Now, let’s build a chatbot that can use the internet to search for answers to the queries it is asked.

For this, first define a function that searches the web using Tavily.

from tavily import TavilyClient
from autogen import register_function

def web_search(query: str):
    # TavilyClient() picks up the API key from the environment
    tavily_client = TavilyClient()
    response = tavily_client.search(query, max_results=3)
    return response['results']

Next, define an assistant agent that decides whether to call the tool or terminate:

assistant = ConversableAgent(
    name="Assistant",
    system_message="""You are a helpful AI assistant. You can search the web to get the results.
    Return 'TERMINATE' when the task is done.""",
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
    silent=True,
)

The user proxy agent interacts with the assistant agent and executes the tool calls.

user_proxy = ConversableAgent(
    name="User",
    llm_config=False,
    is_termination_msg=lambda msg: msg.get("content") is not None and "TERMINATE" in msg["content"],
    human_input_mode="TERMINATE",
)

When the termination condition is met, the user proxy asks for human input. We can either continue with another query or end the chat.

Register the function for the two agents:

register_function(
    web_search,
    caller=assistant,  # The assistant agent can suggest calls to the web search tool.
    executor=user_proxy,  # The user proxy agent can execute the web search calls.
    name="web_search",  # By default, the function name is used as the tool name.
    description="Searches the internet to get the results for a given query",  # A description of the tool.
)

Now we can query:

chat_result = user_proxy.initiate_chat(assistant, message="Who won the Nobel prizes in 2024")

# Depending on the length of the chat history, we can access the required content
print(chat_result.chat_history[5]['content'])
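
The hard-coded index above depends on how many tool calls the assistant made before answering. A more tolerant lookup is sketched below, assuming that tool outputs are stored with the role "tool" and that the final answer may carry the word TERMINATE. The helper last_text_message and the sample history are hypothetical and only illustrate the idea.

```python
def last_text_message(chat_history, stop_word="TERMINATE"):
    """Return the text of the last message that contains a written answer."""
    for message in reversed(chat_history):
        if message.get("role") == "tool":
            continue  # raw tool output, not a written answer
        content = message.get("content")
        if not isinstance(content, str):
            continue  # tool-call suggestions may have no text content
        text = content.replace(stop_word, "").strip()
        if text:
            return text
    return None


# Hypothetical sample data standing in for chat_result.chat_history.
sample_history = [
    {"role": "assistant", "content": "Who won the Nobel prizes in 2024"},
    {"role": "user", "content": None},
    {"role": "tool", "content": "[raw search results]"},
    {"role": "user", "content": "Here is a summary of the search results. TERMINATE"},
]
print(last_text_message(sample_history))
```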

In this way, we can build different types of agentic chatbots using AutoGen.

Also Read: Strategic Team Building with AutoGen AI

Conclusion

In this article, we learned how to build agentic chatbots using AutoGen and explored their various capabilities. With its agent-based architecture, developers can build flexible and scalable bots capable of complex interactions, such as dialectics and web searches. AutoGen’s simple setup and tool integration let users craft customized conversational agents for various applications. As AI-driven communication evolves, AutoGen serves as a valuable framework for simplifying and enhancing chatbot development.


Frequently Asked Questions

A. AutoGen is a framework that simplifies the development of chatbots by using an agent-based architecture, allowing for flexible and scalable conversational interactions.

A. Yes, AutoGen supports various conversation patterns, including sequential and group chats, allowing developers to tailor interactions to their needs.

A. AutoGen uses agent-to-agent communication, enabling multiple agents to engage in structured dialogues, such as dialectics, which makes complex conversational scenarios easier to manage.

A. You can terminate a chat in AutoGen by using parameters like `max_consecutive_auto_reply`, which limits the number of consecutive replies, or `is_termination_msg`, which checks for specific keywords in the conversation to trigger an automatic end. We can also use `max_turns` to limit the conversation.

A. AutoGen allows agents to use external tools, like Tavily for web searches, by registering functions that the agents can call during conversations, extending the chatbot’s capabilities with real-time data and additional functionality.