We’re excited to announce that Databricks now supports Amazon EC2 G6 instances powered by NVIDIA L4 Tensor Core GPUs. This addition marks a step forward in enabling more efficient and scalable data processing, machine learning, and AI workloads on the Databricks Data Intelligence Platform.

Why AWS G6 GPU Instances?

Amazon Web Services (AWS) G6 instances are powered by lower-cost, energy-efficient NVIDIA L4 GPUs. Based on NVIDIA’s Ada Lovelace architecture with 4th-generation Tensor Cores, these GPUs support demanding AI and machine learning workloads:

- G6 instances deliver up to 2x higher performance for deep learning inference and graphics workloads compared to G4dn instances that run on NVIDIA T4 GPUs.

- G6 instances have twice the compute power but only half the memory bandwidth of G5 instances powered by NVIDIA A10G Tensor Core GPUs. (Note: Most LLM and other autoregressive transformer model inference tends to be memory-bound, meaning that the A10G may still be a better choice for applications such as chat, but the L4 is performance-optimized for inference on compute-bound workloads.)
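To make the memory-bound versus compute-bound distinction concrete, here is a minimal roofline-style sketch. It works in relative units, with G5 set to 1.0 for both compute and bandwidth, and takes the G6 ratios directly from the comparison above. The `Gpu` class, the function name, and the two arithmetic-intensity values are hypothetical and chosen only for illustration; this is not a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    peak_compute: float      # relative peak compute throughput
    memory_bandwidth: float  # relative memory bandwidth


def attainable_throughput(gpu: Gpu, arithmetic_intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(gpu.peak_compute, arithmetic_intensity * gpu.memory_bandwidth)


# Relative units only: G5 is the baseline, G6 has 2x compute and 0.5x bandwidth.
G5 = Gpu("G5 (A10G)", peak_compute=1.0, memory_bandwidth=1.0)
G6 = Gpu("G6 (L4)", peak_compute=2.0, memory_bandwidth=0.5)

# Hypothetical arithmetic intensities (work done per byte moved).
for label, intensity in [("memory-bound", 0.25), ("compute-bound", 8.0)]:
    for gpu in (G5, G6):
        value = attainable_throughput(gpu, intensity)
        print(f"{label:>13} | {gpu.name:<9} | {value:.3f}")
```

Under these assumptions the low-intensity workload runs faster on G5, because bandwidth is the limit, while the high-intensity workload runs faster on G6, because compute is the limit.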

Use Cases: Accelerating Your AI and Machine Learning Workflows

- Deep learning inference: The L4 GPU is optimized for batch inference workloads, providing a balance between high computational power and energy efficiency. It offers excellent support for TensorRT and other inference-optimized libraries, which help reduce latency and boost throughput in applications like computer vision, natural language processing, and recommendation systems.

- Image and audio preprocessing: The L4 GPU excels at parallel processing, which is key for data-intensive tasks like image and audio preprocessing. For example, image or video decoding and transformations benefit from the GPU.

- Training deep learning models: The L4 GPU is highly efficient for training relatively small deep learning models (fewer than 1B parameters).
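As a rough sanity check on the "fewer than 1B parameters" guidance, the sketch below estimates the memory needed for model state during training. It assumes a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with Adam (half-precision weights and gradients plus full-precision weights and optimizer state) and a single L4 with 24 GB of memory. Activations are ignored, so real usage will be higher. The function names are hypothetical.

```python
def training_state_gb(num_params: int, bytes_per_param: int = 16) -> float:
    """Approximate memory for weights, gradients, and Adam state, in GB.

    bytes_per_param=16 is a rule-of-thumb assumption for mixed-precision
    Adam training; activation memory is NOT included.
    """
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: int, gpu_memory_gb: float = 24.0) -> bool:
    """True if the model state alone fits in the assumed GPU memory."""
    return training_state_gb(num_params) <= gpu_memory_gb


for params in (350_000_000, 1_000_000_000, 2_000_000_000):
    print(f"{params:>13,} params -> {training_state_gb(params):5.1f} GB, "
          f"fits: {fits_on_gpu(params)}")
```

With these assumptions a 1B-parameter model needs about 16 GB for model state alone, which leaves limited room for activations on one GPU, while a 2B-parameter model already exceeds 24 GB.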

How to Get Started

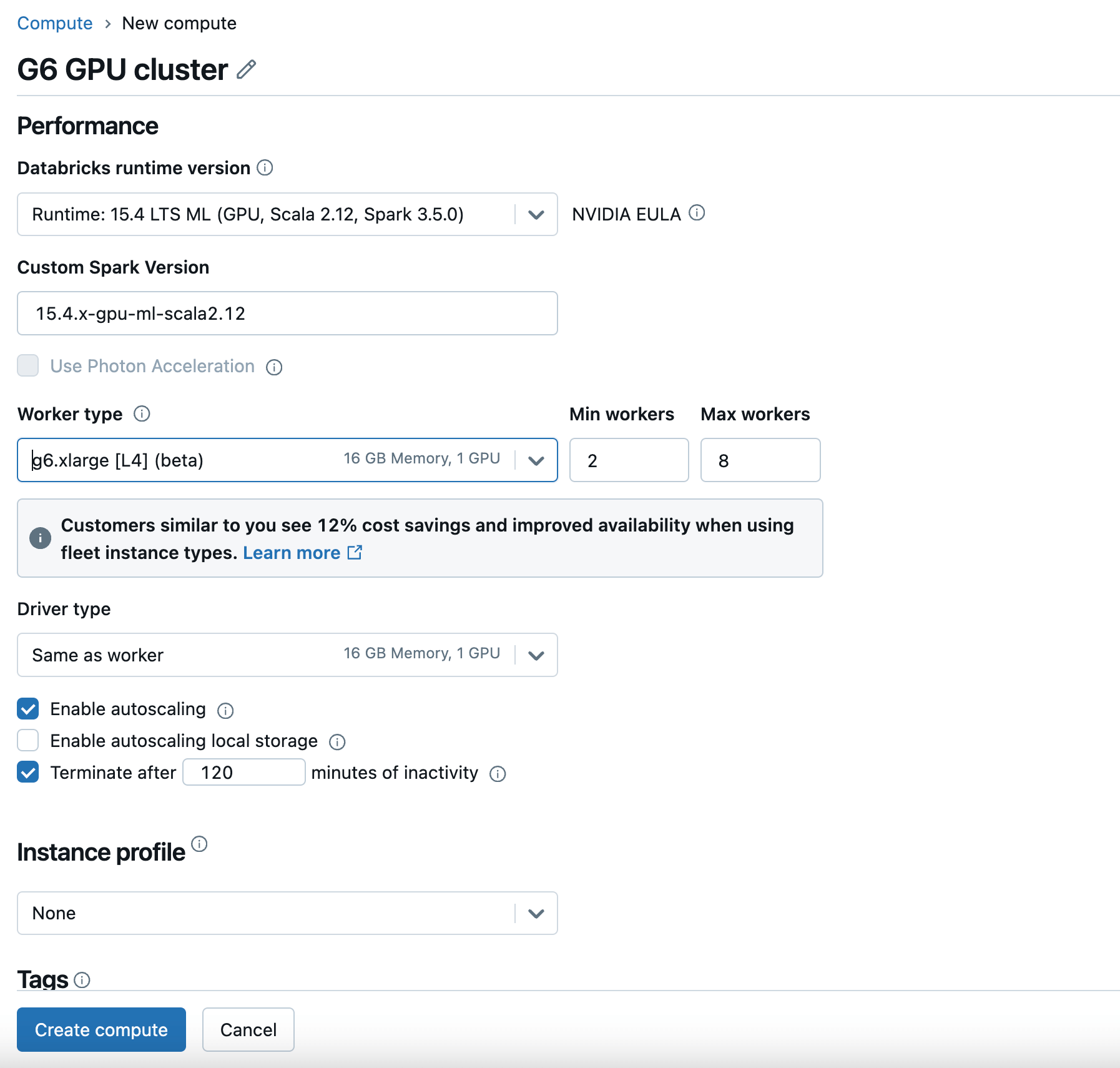

To start using G6 GPU instances on Databricks, simply create a new compute resource with a GPU-enabled Databricks Runtime version and choose G6 as the Worker Type and Driver Type. For details, check the Databricks documentation.

G6 instances are available now in the AWS US East (N. Virginia and Ohio) and US West (Oregon) regions. Check the AWS documentation for additional regions as they become available.
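If you prefer to define compute as code rather than through the UI, the same choices can be expressed as a cluster specification. The sketch below only builds the request body you might send to the Databricks Clusters API. The helper name, the cluster name, the `g6.xlarge` size, and the runtime version string are placeholders, so substitute the values your workspace actually lists.

```python
import json


def g6_cluster_spec(cluster_name: str,
                    spark_version: str,
                    node_type_id: str = "g6.xlarge",
                    num_workers: int = 1) -> dict:
    """Build a cluster spec that uses the same G6 type for driver and workers."""
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,       # must be a GPU-enabled runtime
        "node_type_id": node_type_id,         # Worker Type
        "driver_node_type_id": node_type_id,  # Driver Type
        "num_workers": num_workers,
    }


# Placeholder values: check your workspace for the available GPU runtimes.
spec = g6_cluster_spec("g6-demo", "15.4.x-gpu-ml-scala2.12")
print(json.dumps(spec, indent=2))
```

The resulting JSON can then be submitted with whichever client you already use for Databricks automation.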

Looking Ahead

The addition of G6 GPU support on AWS is one of the many steps we’re taking to ensure that Databricks remains at the forefront of AI and data analytics innovation. We recognize that our customers are eager to take advantage of cutting-edge platform capabilities and gain insights from their proprietary data. We will continue to support more GPU instance types, such as Gr6 and P5e instances, and more GPU types, such as AMD GPUs. Our goal is to support AI compute innovations as they become available to our customers.

Conclusion

Whether you’re a researcher who wants to train DL models like recommendation systems, a data scientist who wants to run DL batch inference on your data from Unity Catalog (UC), or a data engineer who wants to process video and audio data, this latest integration ensures that Databricks continues to provide a robust, future-ready platform for all your data and AI needs.

Get started today and experience the next level of performance for your data and machine learning workloads on Databricks.